The Road to Strong AI (According to Judea Pearl)

October 28, 2018

This post is a brief summary of Judea Pearl’s paper “Theoretical Impediments to Machine Learning With Seven Sparks from the Causal Revolution”.

What more do we need in order to reach a stage where Artificial Intelligence rivals human intelligence?

Consider an eagle’s eyesight. Its vision system has evolved over millions of years by taking in a stream of sensory inputs and optimizing its performance.

The eye of a golden eagle. Peter Kaminski from San Francisco, California, USA, CC BY 2.0

This is Darwin’s evolutionary process at work, and it’s slow. Most machine learning algorithms operate in this way.

On the other hand, humans were able to invent telescopes and microscopes in a few thousand years, and these rival or surpass the best vision systems found in nature.

The Fitz Telescope at Ann Arbor. Aquillam, CC BY-SA 4.0

How did this super-evolutionary process happen?

Pearl argues that this was possible because humans have a “blueprint of the environment”, a mental model of how things work, and this helps in imagining alternate universes and hypothetical situations that have not actually happened.

How might we build a system that can answer retrospective, hypothetical “what-if” questions? According to Pearl, data alone is not enough: model-driven reasoning is necessary for strong AI.

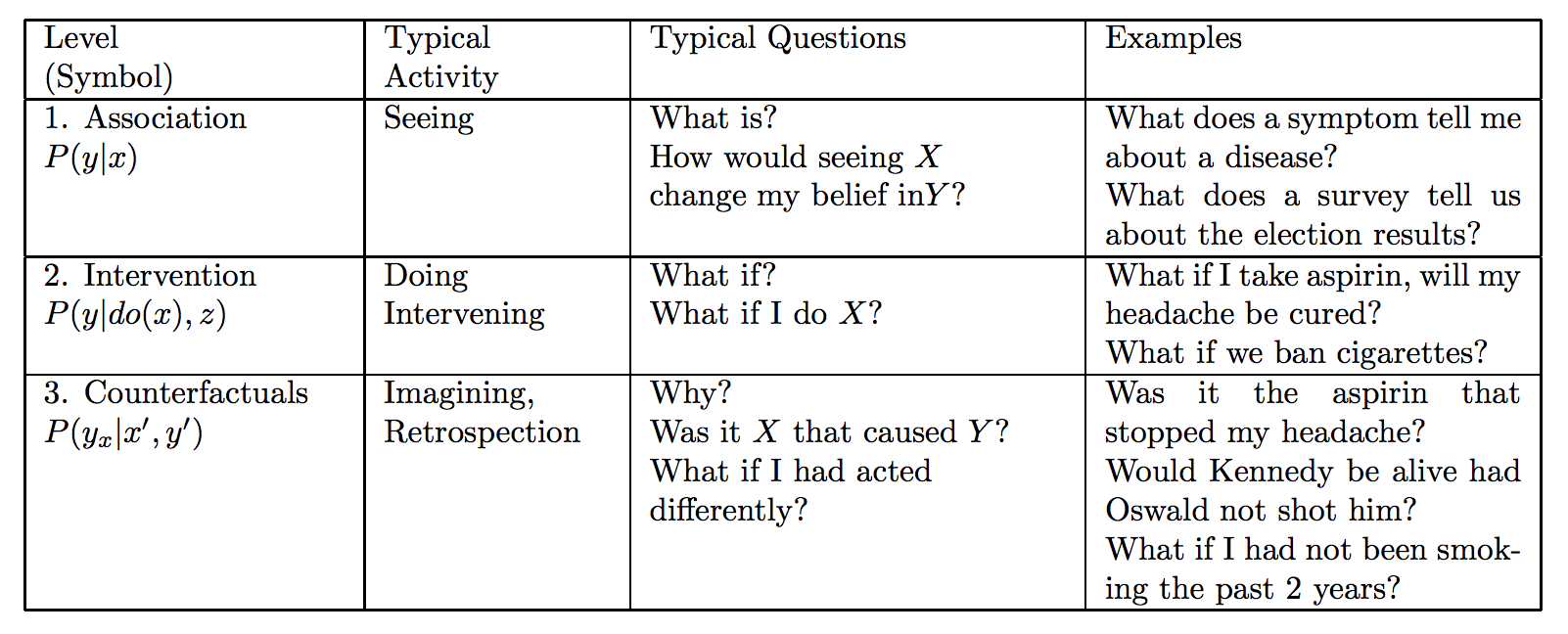

The Causal Hierarchy

Causal theory classifies information into a three-level hierarchy. This sharp categorization tells us what kind of information is needed to answer a question at a given level: a question at a particular level can be answered only if information from that level or a higher one is available.

- Association questions can be answered from observed data alone, and nothing more.

- Intervention questions ask what happens when we actively change something, rather than just observe it.

- Counterfactual questions are retrospective: they ask what would have happened if we had acted differently.

Counterfactual questions subsume association and intervention questions.

Structural Causal Models (SCMs) can answer counterfactual questions, so they can also answer intervention and association questions. The theory of Structural Causal Models allows us to formally codify background knowledge, and it warns about the limitations of methods that use only data for estimation. In short, certain classes of questions (specifically interventions and counterfactuals) cannot be answered from data alone.
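To make the three levels concrete, here is a minimal sketch of a toy SCM in Python. The model, its variable names and its noise probabilities are all made up for illustration and do not come from Pearl’s paper. A hidden common cause Z drives both X and Y, while X has no causal effect on Y. Because the model is tiny, each query is computed exactly by enumerating the noise settings rather than by sampling.

```python
from fractions import Fraction
from itertools import product

# Hypothetical toy SCM (an illustration, not taken from Pearl's paper):
#   Z := U_z          common cause
#   X := Z xor U_x    X usually copies Z
#   Y := Z xor U_y    Y usually copies Z; X has NO causal effect on Y
# P(U = 1) for each independent noise term. The numbers are made up.
NOISE = {"u_z": Fraction(1, 2), "u_x": Fraction(1, 10), "u_y": Fraction(1, 5)}


def solve(u, do_x=None):
    """Evaluate the structural equations for one noise setting.

    Passing do_x replaces the equation for X with a constant (an intervention).
    """
    z = u["u_z"]
    x = z ^ u["u_x"] if do_x is None else do_x
    y = z ^ u["u_y"]
    return {"z": z, "x": x, "y": y}


def noise_settings():
    """Yield every noise setting together with its probability."""
    names = list(NOISE)
    for values in product((0, 1), repeat=len(names)):
        u = dict(zip(names, values))
        p = Fraction(1)
        for name, value in u.items():
            p *= NOISE[name] if value else 1 - NOISE[name]
        yield u, p


def association(x):
    """Level 1: P(Y=1 | X=x), what we expect after seeing X=x."""
    num = den = Fraction(0)
    for u, p in noise_settings():
        world = solve(u)
        if world["x"] == x:
            den += p
            num += p * world["y"]
    return num / den


def intervention(x):
    """Level 2: P(Y=1 | do(X=x)), what we expect after setting X=x."""
    return sum(p * solve(u, do_x=x)["y"] for u, p in noise_settings())


def counterfactual(x_obs, y_obs, x_new):
    """Level 3: P(Y would be 1 had X been x_new | we saw X=x_obs, Y=y_obs)."""
    num = den = Fraction(0)
    for u, p in noise_settings():
        world = solve(u)
        if world["x"] == x_obs and world["y"] == y_obs:
            den += p                                # keep noise consistent with the evidence
            num += p * solve(u, do_x=x_new)["y"]    # replay the same noise with X changed
    return num / den


print(association(1))           # 37/50
print(intervention(1))          # 1/2
print(counterfactual(1, 1, 0))  # 1
```

In this toy model, seeing X=1 raises the probability of Y=1 to 37/50, but setting X=1 leaves it at 1/2, because the association comes entirely from Z. The counterfactual answer differs again: for a unit where X=1 and Y=1 were actually observed, Y would still have been 1 had X been 0. Note that all three functions need the structural equations in `solve`. Observed data alone would only support the first one.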

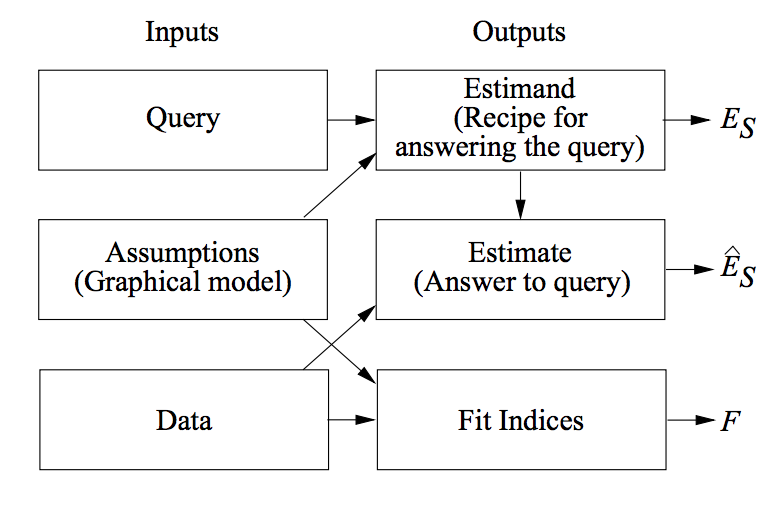

SCM Inference Engine

If we were to build an inference engine using SCMs, it would look like this:

The inputs would be the query (the question that needs to be answered), the assumptions (usually codified as a directed graph) and the data.

The outputs would be the estimand (a recipe or formula for answering the query, derived from the assumptions), the estimate (the answer to the query, computed from the estimand and the data) and fit indices (a measure of compatibility between the data and the assumptions).
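The flow from inputs to outputs can be sketched in a few lines of Python. This is only an illustration under strong assumptions, not Pearl’s engine: the graph, the counts and all function names are hypothetical, and the estimand is restricted to one well-known case, adjusting for the observed parents of the treatment variable (back-door adjustment). A real engine would derive estimands for arbitrary graphs and queries.

```python
from fractions import Fraction

# Input: assumptions, as a directed graph (node -> list of parents). Hypothetical.
GRAPH = {"z": [], "x": ["z"], "y": ["x", "z"]}

# Input: data, as made-up counts of observed (z, x, y) rows.
COUNTS = {
    (0, 0, 0): 30, (0, 0, 1): 10, (0, 1, 0): 5, (0, 1, 1): 5,
    (1, 0, 0): 4, (1, 0, 1): 6, (1, 1, 0): 10, (1, 1, 1): 30,
}


def count(**fixed):
    """Number of rows whose variables match the given values."""
    total = 0
    for (z, x, y), n in COUNTS.items():
        row = {"z": z, "x": x, "y": y}
        if all(row[name] == value for name, value in fixed.items()):
            total += n
    return total


def estimand(graph, treatment, outcome):
    """Output 1: a formula for P(outcome | do(treatment)).

    Assumes the treatment has exactly one parent and that it is observed,
    so adjusting for that parent identifies the effect.
    """
    (adj,) = graph[treatment]
    return f"sum over {adj} of P({outcome} | {treatment}, {adj}) * P({adj})"


def estimate(x, y):
    """Output 2: the estimand above evaluated on COUNTS for P(Y=y | do(X=x)).

    Raises ZeroDivisionError if some (z, x) combination never occurs in the data.
    """
    result = Fraction(0)
    for z in (0, 1):
        p_y_given_xz = Fraction(count(z=z, x=x, y=y), count(z=z, x=x))
        p_z = Fraction(count(z=z), count())
        result += p_y_given_xz * p_z
    return result


def naive(x, y):
    """For comparison: the purely associational P(Y=y | X=x)."""
    return Fraction(count(x=x, y=y), count(x=x))


# Input: the query, here P(Y=1 | do(X=1)).
print(estimand(GRAPH, "x", "y"))
print(estimate(1, 1))  # 5/8
print(naive(1, 1))     # 7/10
```

The sketch leaves out fit indices. In this particular graph every pair of variables is connected, so the assumptions imply no conditional independences that the data could contradict. A sparser graph would, and checking them against the data is what a fit index would report.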

Conclusion

There are theoretical limitations to the kinds of questions that can be answered from data alone. It is worthwhile to explore whether model-driven reasoning is the missing link to human-level artificial intelligence. As Pearl says:

“Data alone are hardly a science, regardless how big they get and how skillfully they are manipulated.” (Judea Pearl, 2018)